Price: $689.00

(as of Oct 08, 2024 14:43:28 UTC)

Product Description:

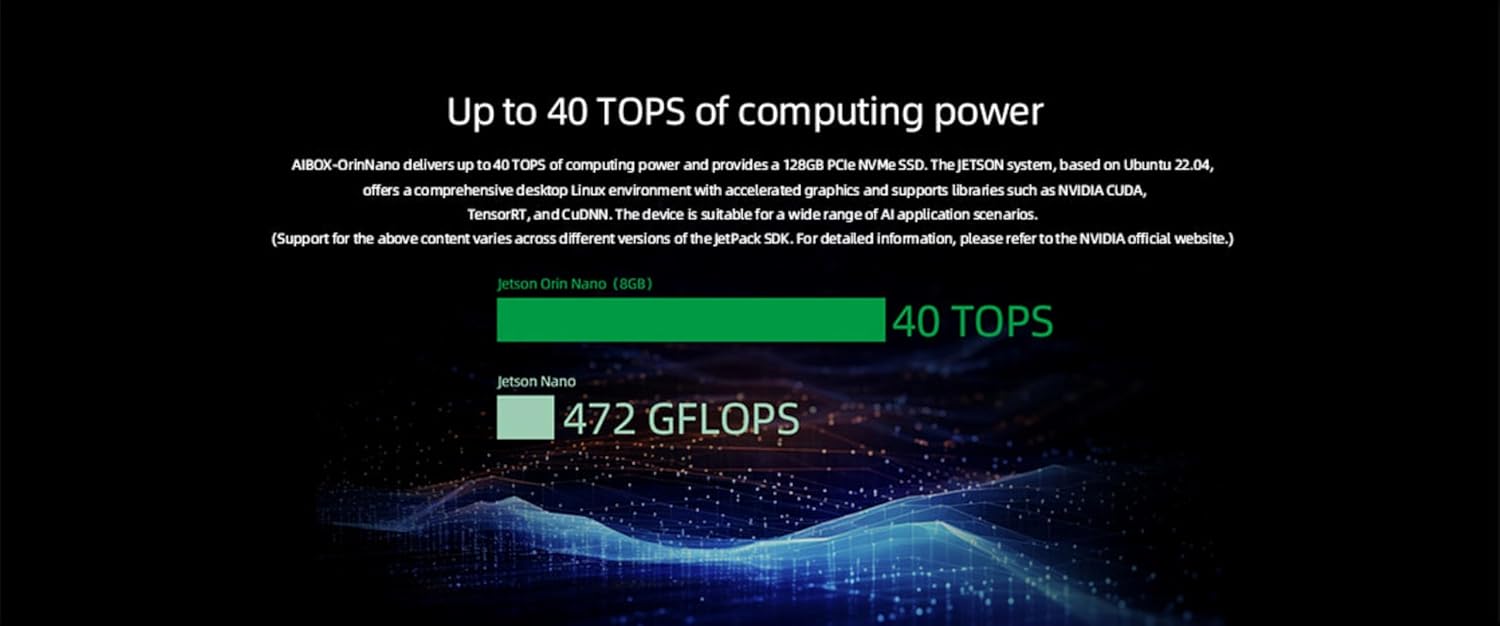

AIBOX-Orin Nano is a high-performance edge AI device built on the NVIDIA Jetson Orin Nano module, delivering up to 40 TOPS of AI performance. It pairs an Ampere GPU with a 6-core Arm CPU and 8GB of LPDDR5 memory, enough to run complex AI models efficiently. Its small form factor makes it well suited to robotics, smart cities, and industrial automation.

Key Features:

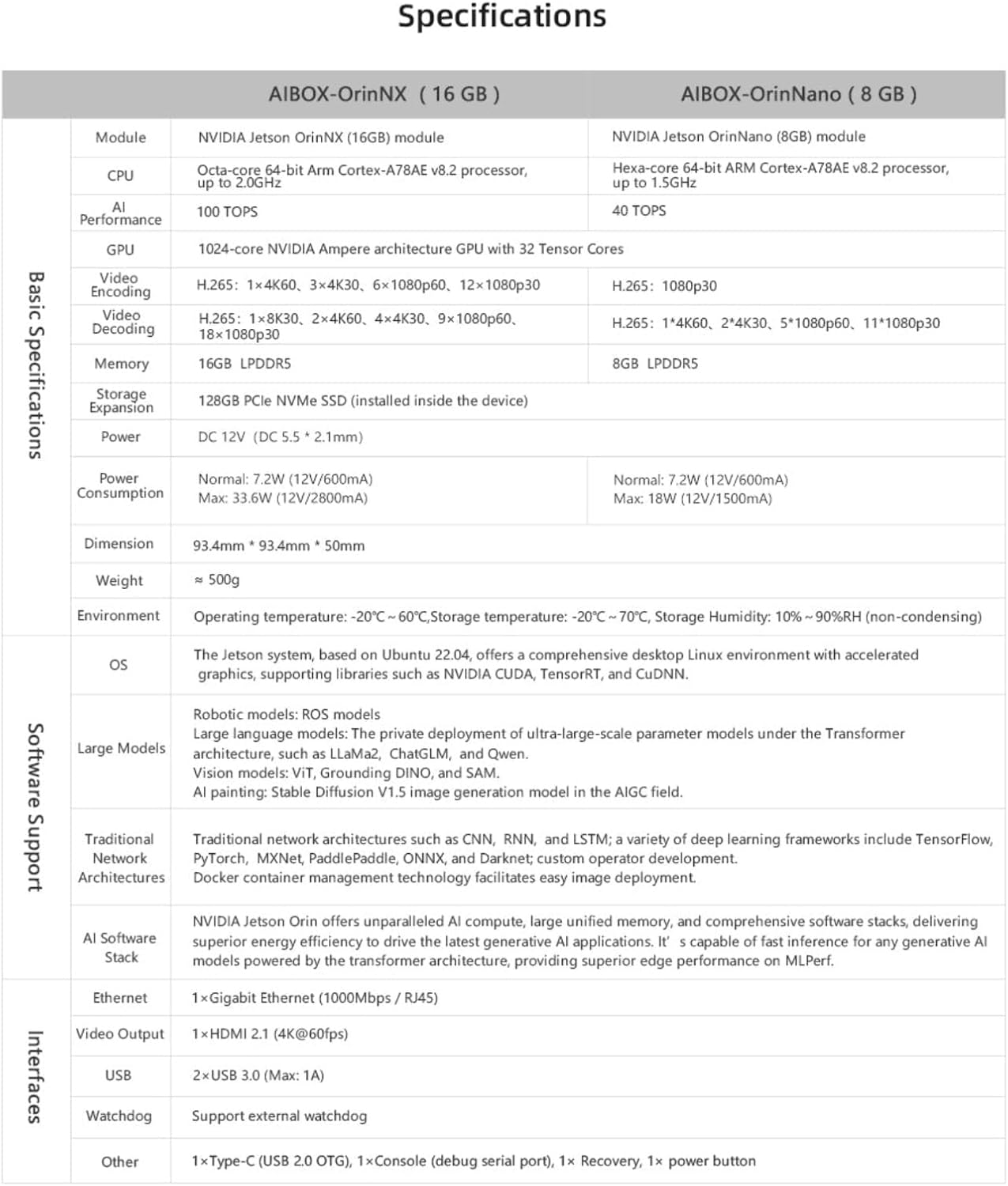

- Module:
  - Jetson Orin Nano (8GB) module
- CPU:
  - Hexa-core 64-bit Arm Cortex-A78AE v8.2 processor, up to 1.5GHz
- GPU:
  - 1024-core Ampere architecture GPU with 32 Tensor Cores
- Memory:
  - 8GB LPDDR5
- Storage:
  - 128GB PCIe NVMe SSD (installed inside the device)
- Power:
  - DC 12V (5.5 × 2.1 mm DC jack)
- OS:
  - Based on Ubuntu 22.04, the Jetson system provides a full desktop Linux environment with accelerated graphics and supports libraries such as TensorRT and cuDNN.

Tech support: youyeetoo Discord or forum (youyeetoo.com)

Communication and customization consulting:

am2 @ youyeetoo DOT com

[Package Contents] 1 × AIBOX-Orin Nano, 1 × 12V/3A power adapter, 1 × USB 2.0 Type-C cable

[Jetson Orin Nano Module] The AIBOX-Orin Nano is an edge AI device powered by the Jetson Orin Nano module. It combines 1024 CUDA cores and 32 Tensor Cores for 40 TOPS of AI performance with a 1.5GHz hexa-core Arm CPU. With 8GB of LPDDR5 memory, it is suited to complex AI workloads.

[Generative AI at the Edge] Jetson Orin combines strong AI compute, a large unified memory, and a comprehensive software stack, delivering the energy efficiency needed to run current generative AI applications. It supports fast inference for transformer-based generative AI models and posts strong edge results on the MLPerf benchmarks.

[A Wide Range of Applications] AIBOX-Orin Nano is used in intelligent surveillance, AI education, computing-power services, edge computing, private deployment of large models, data security, and privacy protection.

[AI Software Stack and Ecosystem] Jetson Orin Nano's extensive AI software stack and ecosystem, including JetPack and Isaac ROS, simplifies edge AI and robotics development. It enables private deployment of large Transformer models and supports both traditional machine learning and deep learning frameworks. It also supports custom operator development and Docker container management, which streamlines the integration of advanced technologies and speeds up AI application development and deployment.